
Official YOLOv7

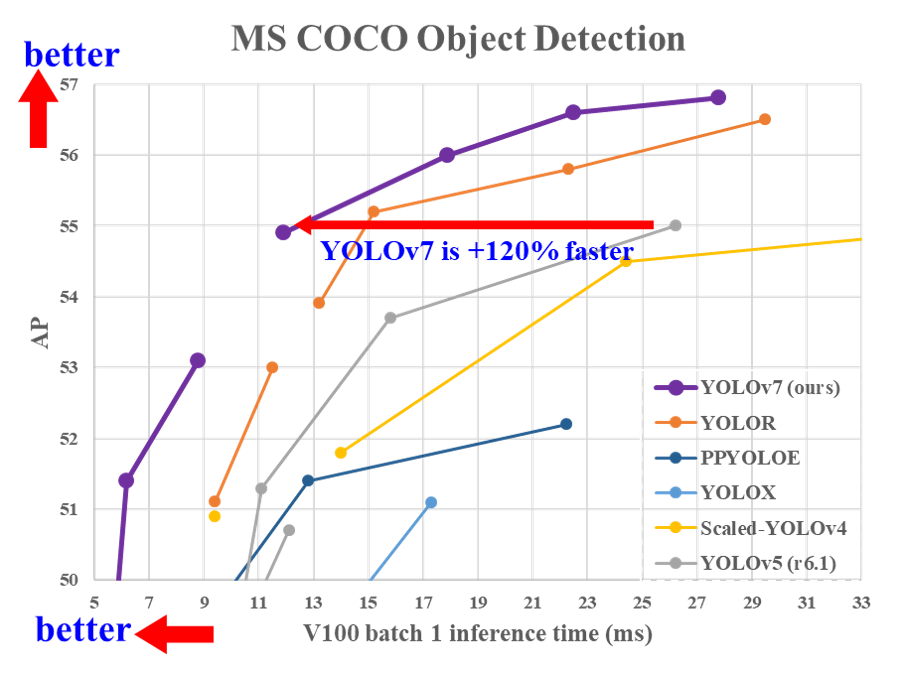

Implementation of paper - YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

Web Demo

- Integrated into Hugging Face Spaces 🤗 using Gradio. Try out the Web Demo.

Performance

MS COCO

| Model | Test Size | AP<sup>test</sup> | AP<sub>50</sub><sup>test</sup> | AP<sub>75</sub><sup>test</sup> | batch 1 fps | batch 32 average time |
|---|---|---|---|---|---|---|
| YOLOv7 | 640 | 51.4% | 69.7% | 55.9% | 161 fps | 2.8 ms |
| YOLOv7-X | 640 | 53.1% | 71.2% | 57.8% | 114 fps | 4.3 ms |
| YOLOv7-W6 | 1280 | 54.9% | 72.6% | 60.1% | 84 fps | 7.6 ms |
| YOLOv7-E6 | 1280 | 56.0% | 73.5% | 61.2% | 56 fps | 12.3 ms |
| YOLOv7-D6 | 1280 | 56.6% | 74.0% | 61.8% | 44 fps | 15.0 ms |
| YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 fps | 18.7 ms |
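The two speed columns use different units, so a quick conversion can help when comparing them. The sketch below assumes the batch 32 average time is a per-image latency (the table does not state this explicitly) and converts it to images per second. The helper name `images_per_second` is illustrative and not part of this repository.

```python
# Batch-32 average times in milliseconds, copied from the table above.
batch32_ms = {
    "YOLOv7": 2.8,
    "YOLOv7-X": 4.3,
    "YOLOv7-W6": 7.6,
    "YOLOv7-E6": 12.3,
    "YOLOv7-D6": 15.0,
    "YOLOv7-E6E": 18.7,
}


def images_per_second(ms_per_image: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    if ms_per_image <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / ms_per_image


# Assumption: each time in the table is the average cost of ONE image
# when 32 images are processed together.
throughput = {name: images_per_second(ms) for name, ms in batch32_ms.items()}

for name, ips in throughput.items():
    print(f"{name:<11} {ips:6.1f} img/s")
```

Under that assumption, batched throughput is roughly two times the batch 1 fps figure for every model in the table.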

Installation

Docker environment (recommended)


# create the docker container; you can increase the shared memory size if you have more available.

nvidia-docker run --name yolov7 -it -v your_coco_path/:/coco/ -v your_code_path/:/yolov7 --shm-size=64g nvcr.io/nvidia/pytorch:21.08-py3

# apt install required packages

apt update

apt install -y zip htop screen libgl1-mesa-glx

# pip install required packages

pip install seaborn thop

# go to code folder

cd /yolov7

Testing

yolov7.pt yolov7x.pt yolov7-w6.pt yolov7-e6.pt yolov7-d6.pt yolov7-e6e.pt

python test.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.65 --device 0 --weights yolov7.pt --name yolov7_640_val

You will get the results:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.69730

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.55521

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.35247

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.55937

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.66693

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.38453

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.63765

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.68772

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.53766

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.73549

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.83868

To measure accuracy, download the COCO annotations for pycocotools.
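The twelve lines printed above follow the standard summary order of pycocotools. As a rough sketch (not the code `test.py` itself runs), the helper below attaches readable names to those values, and `evaluate_bbox` shows how the same numbers could be produced directly with `COCOeval`. The function names and both file paths are hypothetical; pycocotools is imported lazily, so the naming helper works without it.

```python
# Order of the 12 values in COCOeval.stats, matching the printed summary above.
STAT_NAMES = [
    "AP", "AP50", "AP75", "AP_small", "AP_medium", "AP_large",
    "AR_1", "AR_10", "AR_100", "AR_small", "AR_medium", "AR_large",
]


def label_stats(stats):
    """Pair each COCO summary statistic with a readable name."""
    stats = list(stats)
    if len(stats) != len(STAT_NAMES):
        raise ValueError(f"expected {len(STAT_NAMES)} values, got {len(stats)}")
    return dict(zip(STAT_NAMES, (float(s) for s in stats)))


def evaluate_bbox(annotation_json, prediction_json):
    """Run COCO bounding-box evaluation; both paths are placeholders."""
    # Imported here so label_stats stays usable without pycocotools.
    from pycocotools.coco import COCO
    from pycocotools.cocoeval import COCOeval

    ground_truth = COCO(annotation_json)
    detections = ground_truth.loadRes(prediction_json)
    evaluator = COCOeval(ground_truth, detections, "bbox")
    evaluator.evaluate()
    evaluator.accumulate()
    evaluator.summarize()  # prints the 12-line table
    return label_stats(evaluator.stats)


# Values copied from the results listed above.
readme_values = [
    0.51206, 0.69730, 0.55521, 0.35247, 0.55937, 0.66693,
    0.38453, 0.63765, 0.68772, 0.53766, 0.73549, 0.83868,
]
labelled = label_stats(readme_values)
print(labelled["AP"], labelled["AP50"], labelled["AP75"])
```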

Training

Data preparation

bash scripts/get_coco.sh

- Download MS COCO dataset images (train, val, test) and labels. If you have previously used a different version of YOLO, we strongly recommend that you delete the `train2017.cache` and `val2017.cache` files and redownload the labels.
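Stale cache files can be located before deleting them by hand. The sketch below searches a dataset folder for `*.cache` files and only deletes them when `dry_run` is turned off. The `coco` root directory and the function name `remove_label_caches` are assumptions for illustration; adjust the path to wherever your dataset lives.

```python
from pathlib import Path


def remove_label_caches(dataset_root, dry_run=True):
    """Return *.cache files under dataset_root; delete them unless dry_run."""
    root = Path(dataset_root)
    if not root.is_dir():
        return []
    found = sorted(root.rglob("*.cache"))
    for cache_file in found:
        if not dry_run:
            cache_file.unlink()
    return found


if __name__ == "__main__":
    # "coco" is an assumed dataset location, not a path defined by this README.
    for path in remove_label_caches("coco", dry_run=True):
        print("would delete", path)
```

Running it with `dry_run=True` first lists what would be removed, which is a cheap safeguard before deleting anything.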

Single GPU training

# train p5 models

python train.py --workers 8 --device 0 --batch-size 32 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models

python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml

Multiple GPU training

# train p5 models

python -m torch.distributed.launch --nproc_per_node 4 --master_port 9527 train.py --workers 8 --device 0,1,2,3 --sync-bn --batch-size 128 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train_aux.py --workers 8 --device 0,1,2,3,4,5,6,7 --sync-bn --batch-size 128 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml
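In the upstream YOLOv7 training script, `--batch-size` is documented as the total across all GPUs. Assuming this repository keeps that behaviour, the following sketch works out how many images each GPU receives for the two commands above. The helper `per_gpu_batch` is illustrative and not part of the codebase.

```python
def per_gpu_batch(total_batch_size: int, device_arg: str) -> int:
    """Split a total batch size evenly over the GPUs listed in --device."""
    gpu_ids = [d for d in device_arg.split(",") if d.strip()]
    if not gpu_ids:
        raise ValueError("no devices given")
    if total_batch_size % len(gpu_ids) != 0:
        raise ValueError("batch size must be divisible by the GPU count")
    return total_batch_size // len(gpu_ids)


# Values taken from the two multi-GPU commands above.
print(per_gpu_batch(128, "0,1,2,3"))          # p5 command, 4 GPUs -> 32
print(per_gpu_batch(128, "0,1,2,3,4,5,6,7"))  # p6 command, 8 GPUs -> 16
```

Under that assumption, the per-GPU load matches the single-GPU commands (32 images for p5 models, 16 for p6 models), which is a useful starting point when scaling to a different number of GPUs.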

Transfer learning

yolov7_training.pt yolov7x_training.pt yolov7-w6_training.pt yolov7-e6_training.pt yolov7-d6_training.pt yolov7-e6e_training.pt

Single GPU finetuning for custom dataset

# finetune p5 models

python train.py --workers 8 --device 0 --batch-size 32 --data data/custom.yaml --img 640 640 --cfg cfg/training/yolov7-custom.yaml --weights 'yolov7_training.pt' --name yolov7-custom --hyp data/hyp.scratch.custom.yaml

# finetune p6 models

python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/custom.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6-custom.yaml --weights 'yolov7-w6_training.pt' --name yolov7-w6-custom --hyp data/hyp.scratch.custom.yaml
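The fine-tuning commands reference `data/custom.yaml`, which is not shown here. The sketch below renders a minimal dataset description, assuming the file uses the same keys as `data/coco.yaml` in YOLOv5-style repositories (`train`, `val`, `nc`, `names`). The image paths and the class name `pipe` are hypothetical placeholders, and `dataset_yaml` is an illustrative helper, not a function from this repository.

```python
def dataset_yaml(train_dir, val_dir, class_names):
    """Render a minimal dataset description as YAML text."""
    if not class_names:
        raise ValueError("at least one class name is required")
    quoted = ", ".join(f"'{name}'" for name in class_names)
    return (
        f"train: {train_dir}\n"
        f"val: {val_dir}\n"
        f"nc: {len(class_names)}\n"  # number of classes
        f"names: [{quoted}]\n"
    )


# Hypothetical paths and class list; replace with your own dataset.
text = dataset_yaml(
    "./data/custom/images/train",
    "./data/custom/images/val",
    ["pipe"],
)
print(text)
```

Saving the printed text as `data/custom.yaml` would give the training script a dataset file to read, provided the key names match what this repository expects.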

Re-parameterization

Pose estimation

See keypoint.ipynb.

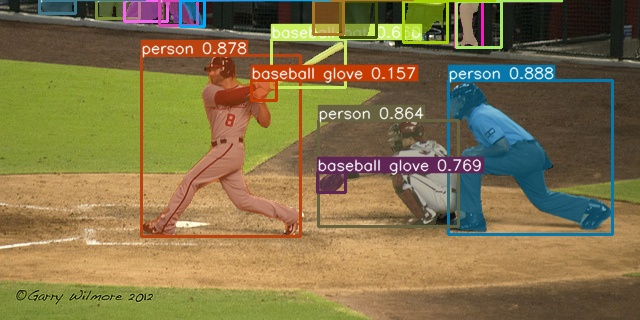

Inference

On video:

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source yourvideo.mp4

On image:

``` bash
python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source inference/images/horses.jpg
```

Export

Use the --include-nms argument to export an end-to-end ONNX model that includes EfficientNMS.

python models/export.py --weights yolov7.pt --grid --include-nms

Citation

@article{wang2022yolov7,

title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},

author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},

journal={arXiv preprint arXiv:2207.02696},

year={2022}

}

Teaser

YOLOv7-mask & YOLOv7-pose

Acknowledgements


- https://github.com/AlexeyAB/darknet

- https://github.com/WongKinYiu/yolor

- https://github.com/WongKinYiu/PyTorch_YOLOv4

- https://github.com/WongKinYiu/ScaledYOLOv4

- https://github.com/Megvii-BaseDetection/YOLOX

- https://github.com/ultralytics/yolov3

- https://github.com/ultralytics/yolov5

- https://github.com/DingXiaoH/RepVGG

- https://github.com/JUGGHM/OREPA_CVPR2022

- https://github.com/TexasInstruments/edgeai-yolov5/tree/yolo-pose

Description

This repository contains code for detecting heat pipes in the greenhouse as well as estimating the pose of the pipes
