<div align="center">
<p>
<a align="center" href="https://ultralytics.com/yolov5" target="_blank">
<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png"></a>
</p>

[English](README.md) | [简体中文](README.zh-CN.md)
<br>

<div>
<a href="https://github.com/ultralytics/yolov3/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov3/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv3 CI"></a>
<a href="https://zenodo.org/badge/latestdoi/264818686"><img src="https://zenodo.org/badge/264818686.svg" alt="YOLOv3 Citation"></a>
<a href="https://hub.docker.com/r/ultralytics/yolov3"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov3?logo=docker" alt="Docker Pulls"></a>
<br>
<a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a>
<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>
<a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>
</div>
<br>

YOLOv3 🚀 is the world's most loved vision AI, representing <a href="https://ultralytics.com">Ultralytics</a> open-source
research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours
of research and development.

To request an Enterprise License please complete the form at <a href="https://ultralytics.com/license">Ultralytics
Licensing</a>.

<div align="center">
<a href="https://github.com/ultralytics" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="2%" alt="" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />
<a href="https://www.linkedin.com/company/ultralytics" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="2%" alt="" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />
<a href="https://twitter.com/ultralytics" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="2%" alt="" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />
<a href="https://www.producthunt.com/@glenn_jocher" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-producthunt.png" width="2%" alt="" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />
<a href="https://youtube.com/ultralytics" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="2%" alt="" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />
<a href="https://www.facebook.com/ultralytics" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-facebook.png" width="2%" alt="" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />
<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>
</div>
</div>
<br>

## <div align="center">YOLOv8 🚀 NEW</div>

We are thrilled to announce the launch of Ultralytics YOLOv8 🚀, our NEW cutting-edge, state-of-the-art (SOTA) model
released at **[https://github.com/ultralytics/ultralytics](https://github.com/ultralytics/ultralytics)**.
YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of
object detection, image segmentation and image classification tasks.

See the [YOLOv8 Docs](https://docs.ultralytics.com) for details and get started with:

```commandline
pip install ultralytics
```

<div align="center">
<a href="https://ultralytics.com/yolov8" target="_blank">
<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov8/yolo-comparison-plots.png"></a>
</div>

## <div align="center">Documentation</div>

See the [YOLOv3 Docs](https://docs.ultralytics.com) for full documentation on training, testing and deployment. See below for
quickstart examples.

<details open>
<summary>Install</summary>

Clone repo and install [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a
[**Python>=3.7.0**](https://www.python.org/) environment, including
[**PyTorch>=1.7**](https://pytorch.org/get-started/locally/).

```bash
git clone https://github.com/ultralytics/yolov3  # clone
cd yolov3
pip install -r requirements.txt  # install
```

</details>
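
To confirm that an environment satisfies the Python>=3.7.0 and PyTorch>=1.7 requirements above, a small check along these lines may help. It is only a sketch: `version_tuple` and `meets_minimum` are hypothetical helpers written for this example, not part of the repository, and the parsing is deliberately simple (pre-release tags such as `rc1` are not handled).

```python
import re
import sys


def version_tuple(version: str) -> tuple:
    """Turn '1.13.1+cu117' into (1, 13, 1), ignoring any local build suffix."""
    numbers = [int(n) for n in re.findall(r"\d+", version.split("+")[0])[:3]]
    return tuple(numbers + [0] * (3 - len(numbers)))  # pad '1.7' to (1, 7, 0)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True when `installed` is at least `minimum`."""
    return version_tuple(installed) >= version_tuple(minimum)


python_version = ".".join(str(part) for part in sys.version_info[:3])
print("Python >= 3.7.0:", meets_minimum(python_version, "3.7.0"))

try:
    import torch  # only importable after `pip install -r requirements.txt`

    print("PyTorch >= 1.7:", meets_minimum(torch.__version__, "1.7"))
except ImportError:
    print("PyTorch is not installed yet")
```

If either line prints `False`, upgrade that component before running the quickstart examples.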

|

|

|

|

<details>

|

|

<summary>Inference</summary>

|

|

|

|

[PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36)

|

|

inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) download automatically from the latest

|

|

[release](https://github.com/ultralytics/yolov5/releases).

|

|

|

|

```python

|

|

import torch

|

|

|

|

# Model

|

|

model = torch.hub.load(

|

|

"ultralytics/yolov3", "yolov3"

|

|

) # or yolov3-spp, yolov3-tiny, custom

|

|

|

|

# Images

|

|

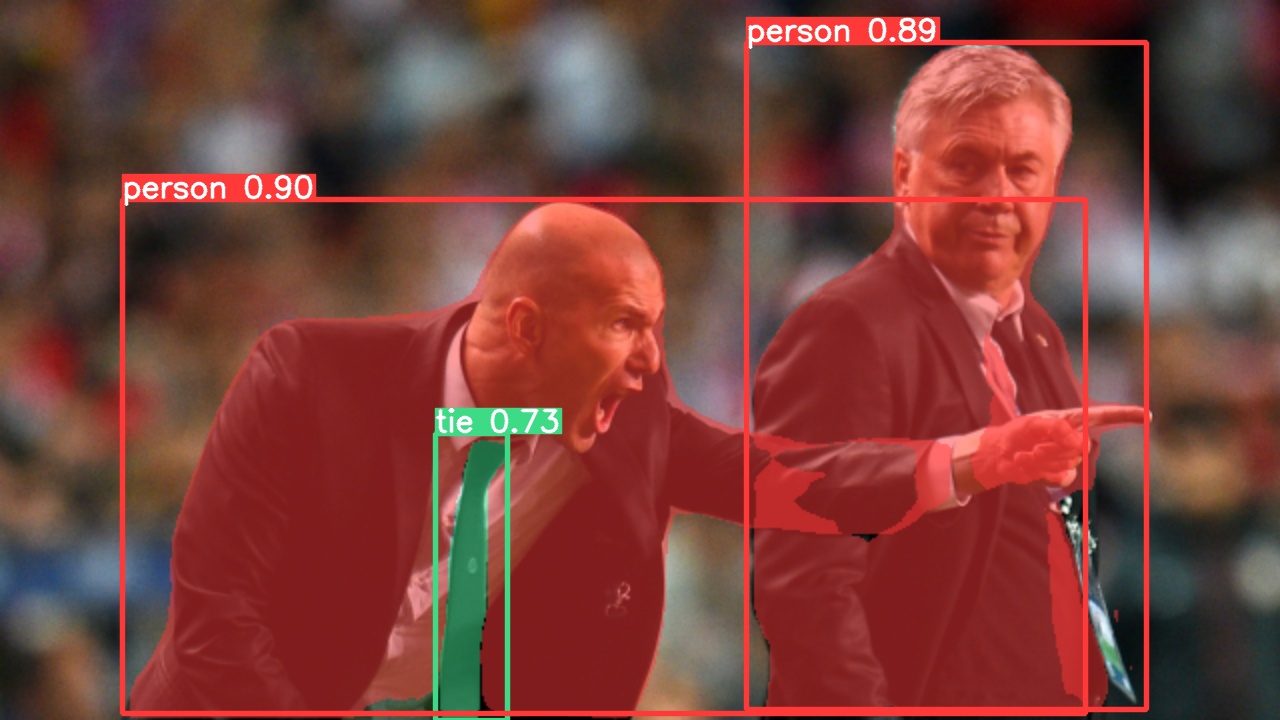

img = "https://ultralytics.com/images/zidane.jpg" # or file, Path, PIL, OpenCV, numpy, list

|

|

|

|

# Inference

|

|

results = model(img)

|

|

|

|

# Results

|

|

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

|

|

```

|

|

|

|

</details>

<details>
<summary>Inference with detect.py</summary>

`detect.py` runs inference on a variety of sources,
downloading [models](https://github.com/ultralytics/yolov5/tree/master/models) automatically from
the latest [release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

```bash
python detect.py --weights yolov5s.pt --source 0                               # webcam
                                               img.jpg                         # image
                                               vid.mp4                         # video
                                               screen                          # screenshot
                                               path/                           # directory
                                               list.txt                        # list of images
                                               list.streams                    # list of streams
                                               'path/*.jpg'                    # glob
                                               'https://youtu.be/Zgi9g1ksQHc'  # YouTube
                                               'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream
```

</details>
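
The `--source` values listed above fall into a handful of kinds. The sketch below shows one way such a string could be sorted into those kinds. It is an illustration only: `describe_source` and the suffix sets are hypothetical and are not taken from `detect.py`, whose real dispatch logic may differ.

```python
from pathlib import Path

# Illustrative subsets, not the full lists any real loader would accept
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}
VIDEO_SUFFIXES = {".mp4", ".mov", ".avi", ".mkv"}
STREAM_SCHEMES = ("rtsp://", "rtmp://", "http://", "https://")


def describe_source(source: str) -> str:
    """Name the kind of input a --source string most likely refers to."""
    text = source.strip()
    lowered = text.lower()
    if text.isnumeric():
        return "webcam"  # e.g. --source 0
    if lowered == "screen":
        return "screenshot"
    if lowered.startswith(STREAM_SCHEMES):
        return "url or stream"  # YouTube, RTSP, RTMP, HTTP
    if "*" in text:
        return "glob"
    suffix = Path(lowered).suffix
    if suffix == ".streams":
        return "list of streams"
    if suffix == ".txt":
        return "list of images"
    if suffix in IMAGE_SUFFIXES:
        return "image"
    if suffix in VIDEO_SUFFIXES:
        return "video"
    return "directory"


for example in ["0", "img.jpg", "vid.mp4", "screen", "path/", "list.txt", "list.streams", "path/*.jpg"]:
    print(f"{example:>14} -> {describe_source(example)}")
```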

<details>
<summary>Training</summary>

The commands below reproduce [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh)
results. [Models](https://github.com/ultralytics/yolov5/tree/master/models)
and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest
[release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are
1/2/4/6/8 days on a V100 GPU ([Multi-GPU](https://github.com/ultralytics/yolov5/issues/475) times faster). Use the
largest `--batch-size` possible, or pass `--batch-size -1` for
[AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). Batch sizes shown for V100-16GB.

```bash
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128
                                                                 yolov5s                    64
                                                                 yolov5m                    40
                                                                 yolov5l                    24
                                                                 yolov5x                    16
```

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png">

</details>
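
The training block above is shorthand for five separate commands, one per model. As a sketch, the full commands can be generated from the batch sizes quoted for a V100-16GB. `train_command` is a hypothetical helper for this example; only the flags and values already shown in this README are used.

```python
# Batch sizes quoted in this README for a V100-16GB GPU
V100_16GB_BATCH_SIZES = {"yolov5n": 128, "yolov5s": 64, "yolov5m": 40, "yolov5l": 24, "yolov5x": 16}


def train_command(model: str, auto_batch: bool = False) -> str:
    """Build the COCO training command for one model config."""
    # --batch-size -1 asks AutoBatch to pick the size instead of using the table
    batch_size = -1 if auto_batch else V100_16GB_BATCH_SIZES[model]
    return (
        "python train.py --data coco.yaml --epochs 300 --weights '' "
        f"--cfg {model}.yaml --batch-size {batch_size}"
    )


for name in V100_16GB_BATCH_SIZES:
    print(train_command(name))
```

On a GPU with a different amount of memory, `train_command(name, auto_batch=True)` produces the AutoBatch variant instead of a fixed size.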

<details open>
<summary>Tutorials</summary>

- [Train Custom Data](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) 🚀 RECOMMENDED
- [Tips for Best Training Results](https://github.com/ultralytics/yolov5/wiki/Tips-for-Best-Training-Results) ☘️ RECOMMENDED
- [Multi-GPU Training](https://github.com/ultralytics/yolov5/issues/475)
- [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) 🌟 NEW
- [TFLite, ONNX, CoreML, TensorRT Export](https://github.com/ultralytics/yolov5/issues/251) 🚀
- [NVIDIA Jetson Nano Deployment](https://github.com/ultralytics/yolov5/issues/9627) 🌟 NEW
- [Test-Time Augmentation (TTA)](https://github.com/ultralytics/yolov5/issues/303)
- [Model Ensembling](https://github.com/ultralytics/yolov5/issues/318)
- [Model Pruning/Sparsity](https://github.com/ultralytics/yolov5/issues/304)
- [Hyperparameter Evolution](https://github.com/ultralytics/yolov5/issues/607)
- [Transfer Learning with Frozen Layers](https://github.com/ultralytics/yolov5/issues/1314)
- [Architecture Summary](https://github.com/ultralytics/yolov5/issues/6998) 🌟 NEW
- [Roboflow for Datasets, Labeling, and Active Learning](https://github.com/ultralytics/yolov5/issues/4975) 🌟 NEW
- [ClearML Logging](https://github.com/ultralytics/yolov5/tree/master/utils/loggers/clearml) 🌟 NEW
- [YOLOv3 with Neural Magic's DeepSparse](https://bit.ly/yolov5-neuralmagic) 🌟 NEW
- [Comet Logging](https://github.com/ultralytics/yolov5/tree/master/utils/loggers/comet) 🌟 NEW

</details>

## <div align="center">Integrations</div>

<br>
<a align="center" href="https://bit.ly/ultralytics_hub" target="_blank">
<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/integrations-loop.png"></a>
<br>
<br>

<div align="center">
<a href="https://roboflow.com/?ref=ultralytics">
<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-roboflow.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />
<a href="https://cutt.ly/yolov5-readme-clearml">
<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-clearml.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />
<a href="https://bit.ly/yolov5-readme-comet">
<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-comet.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />
<a href="https://bit.ly/yolov5-neuralmagic">
<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" /></a>
</div>

| Roboflow | ClearML ⭐ NEW | Comet ⭐ NEW | Neural Magic ⭐ NEW |
| :---: | :---: | :---: | :---: |
| Label and export your custom datasets directly to YOLOv3 for training with [Roboflow](https://roboflow.com/?ref=ultralytics) | Automatically track, visualize and even remotely train YOLOv3 using [ClearML](https://cutt.ly/yolov5-readme-clearml) (open-source!) | Free forever, [Comet](https://bit.ly/yolov5-readme-comet2) lets you save models, resume training, and interactively visualise and debug predictions | Run inference up to 6x faster with [Neural Magic DeepSparse](https://bit.ly/yolov5-neuralmagic) |

## <div align="center">Ultralytics HUB</div>

[Ultralytics HUB](https://bit.ly/ultralytics_hub) is our ⭐ **NEW** no-code solution to visualize datasets, train 🚀
models, and deploy to the real world in a seamless experience. Get started for **Free** now!

<a align="center" href="https://bit.ly/ultralytics_hub" target="_blank">
<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png"></a>

## <div align="center">Why YOLO</div>

YOLOv3 has been designed to be super easy to get started and simple to learn. We prioritize real-world results.

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040763-93c22a27-347c-4e3c-847a-8094621d3f4e.png"></p>
<details>
<summary>YOLOv5-P5 640 Figure</summary>

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040757-ce0934a3-06a6-43dc-a979-2edbbd69ea0e.png"></p>
</details>
<details>
<summary>Figure Notes</summary>

- **COCO AP val** denotes the mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset
  over various inference sizes from 256 to 1536.
- **GPU Speed** measures average inference time per image on the [COCO val2017](http://cocodataset.org) dataset using
  an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100 instance at batch-size 32.
- **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.
- **Reproduce**
  by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

</details>
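
The mAP@0.5:0.95 metric named in the figure notes averages precision over ten IoU thresholds, from 0.50 to 0.95 in steps of 0.05. The sketch below shows only that final averaging step. It assumes the class-averaged AP at each threshold has already been computed, and `coco_iou_thresholds` and `map_50_95` are names made up for this illustration, not functions from `val.py`.

```python
def coco_iou_thresholds() -> list:
    """IoU thresholds 0.50, 0.55, ..., 0.95 used by the mAP@0.5:0.95 metric."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_per_threshold: dict) -> float:
    """Average class-mean AP over the ten IoU thresholds."""
    thresholds = coco_iou_thresholds()
    return sum(ap_per_threshold[t] for t in thresholds) / len(thresholds)


# Placeholder AP values for illustration only, not measured results
example_ap = {t: 1.0 - t for t in coco_iou_thresholds()}
print(coco_iou_thresholds())
print(round(map_50_95(example_ap), 3))
```

The mAP 50 column in the checkpoint table corresponds to the single entry at threshold 0.5, which is why it is always at least as high as the 50-95 value.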

### Pretrained Checkpoints

| Model | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | mAP<sup>val<br>50 | Speed<br><sup>CPU b1<br>(ms) | Speed<br><sup>V100 b1<br>(ms) | Speed<br><sup>V100 b32<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@640 (B) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| [YOLOv5n](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n.pt) | 640 | 28.0 | 45.7 | **45** | **6.3** | **0.6** | **1.9** | **4.5** |
| [YOLOv5s](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.pt) | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |
| [YOLOv5m](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m.pt) | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |
| [YOLOv5l](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l.pt) | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |
| [YOLOv5x](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x.pt) | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |
| | | | | | | | | |
| [YOLOv5n6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n6.pt) | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |
| [YOLOv5s6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s6.pt) | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |
| [YOLOv5m6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m6.pt) | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |
| [YOLOv5l6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l6.pt) | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |
| [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x6.pt)<br>+ [TTA] | 1280<br>1536 | 55.0<br>**55.8** | 72.7<br>**72.7** | 3136<br>- | 26.2<br>- | 19.4<br>- | 140.7<br>- | 209.8<br>- |

<details>
<summary>Table Notes</summary>

- All checkpoints are trained to 300 epochs with default settings. Nano and Small models
  use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyps, all
  others use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).
- **mAP<sup>val</sup>** values are for single-model single-scale on the [COCO val2017](http://cocodataset.org) dataset.<br>
  Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`
- **Speed** averaged over COCO val images using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/)
  instance. NMS times (~1 ms/img) not included.<br>Reproduce
  by `python val.py --data coco.yaml --img 640 --task speed --batch 1`
- **TTA** [Test Time Augmentation](https://github.com/ultralytics/yolov5/issues/303) includes reflection and scale
  augmentations.<br>Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

</details>
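
The TTA note mentions reflection and scale augmentations. Boxes predicted on a reflected or rescaled copy of an image have to be mapped back to the original image before they can be merged with the other predictions. The sketch below shows that mapping for a single box. It assumes `(x1, y1, x2, y2)` pixel coordinates and a left-right reflection, and `undo_tta` is a hypothetical helper, not the repository's implementation.

```python
def undo_tta(box, image_width, scale=1.0, flipped_lr=False):
    """Map an (x1, y1, x2, y2) box from an augmented image back to the original image.

    image_width is the width of the ORIGINAL image in pixels.
    scale is the factor the image was resized by before inference.
    """
    x1, y1, x2, y2 = (value / scale for value in box)  # undo the resize
    if flipped_lr:
        # A left-right reflection maps x to (width - x) and swaps the box edges
        x1, x2 = image_width - x2, image_width - x1
    return (x1, y1, x2, y2)


# A box at (100, 50, 200, 150) in a 640-px-wide image appears at
# (220, 25, 270, 75) after a left-right flip and a 0.5x resize.
print(undo_tta((220, 25, 270, 75), image_width=640, scale=0.5, flipped_lr=True))
```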

## <div align="center">Segmentation</div>

Our new YOLOv5 [release v7.0](https://github.com/ultralytics/yolov5/releases/v7.0) instance segmentation models are the
fastest and most accurate in the world, beating all
current [SOTA benchmarks](https://paperswithcode.com/sota/real-time-instance-segmentation-on-mscoco). We've made them
super simple to train, validate and deploy. See full details in
our [Release Notes](https://github.com/ultralytics/yolov5/releases/v7.0) and visit
our [YOLOv5 Segmentation Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/segment/tutorial.ipynb) for
quickstart tutorials.

<details>
<summary>Segmentation Checkpoints</summary>

<div align="center">
<a align="center" href="https://ultralytics.com/yolov5" target="_blank">
<img width="800" src="https://user-images.githubusercontent.com/61612323/204180385-84f3aca9-a5e9-43d8-a617-dda7ca12e54a.png"></a>
</div>

We trained YOLOv5 segmentation models on COCO for 300 epochs at image size 640 using A100 GPUs. We exported all models
to ONNX FP32 for CPU speed tests and to TensorRT FP16 for GPU speed tests. We ran all speed tests on
Google [Colab Pro](https://colab.research.google.com/signup) notebooks for easy reproducibility.

| Model | size<br><sup>(pixels) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | Train time<br><sup>300 epochs<br>A100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TRT A100<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@640 (B) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| [YOLOv5n-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-seg.pt) | 640 | 27.6 | 23.4 | 80:17 | **62.7** | **1.2** | **2.0** | **7.1** |
| [YOLOv5s-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-seg.pt) | 640 | 37.6 | 31.7 | 88:16 | 173.3 | 1.4 | 7.6 | 26.4 |
| [YOLOv5m-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-seg.pt) | 640 | 45.0 | 37.1 | 108:36 | 427.0 | 2.2 | 22.0 | 70.8 |
| [YOLOv5l-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-seg.pt) | 640 | 49.0 | 39.9 | 66:43 (2x) | 857.4 | 2.9 | 47.9 | 147.7 |
| [YOLOv5x-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-seg.pt) | 640 | **50.7** | **41.4** | 62:56 (3x) | 1579.2 | 4.5 | 88.8 | 265.7 |

- All checkpoints are trained to 300 epochs with SGD optimizer with `lr0=0.01` and `weight_decay=5e-5` at image size 640
  and all default settings.<br>Runs logged to https://wandb.ai/glenn-jocher/YOLOv5_v70_official
- **Accuracy** values are for single-model single-scale on COCO dataset.<br>Reproduce
  by `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt`
- **Speed** averaged over 100 inference images using a [Colab Pro](https://colab.research.google.com/signup) A100
  High-RAM instance. Values indicate inference speed only (NMS adds about 1ms per image). <br>Reproduce
  by `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt --batch 1`
- **Export** to ONNX at FP32 and TensorRT at FP16 done with `export.py`. <br>Reproduce
  by `python export.py --weights yolov5s-seg.pt --include engine --device 0 --half`

</details>
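
The speed figures above are averages over 100 inference images. The sketch below shows the general shape of such a measurement: time a callable over a list of inputs and report the mean in milliseconds. It is a CPU wall-clock illustration only. `average_latency_ms` and the warm-up count are assumptions for this example, it is not how `segment/val.py` measures speed, and GPU timing would additionally need device synchronization.

```python
import time


def average_latency_ms(run_inference, inputs, warmup=3):
    """Mean wall-clock time per input in milliseconds, after a short warm-up."""
    for sample in inputs[:warmup]:
        run_inference(sample)  # warm-up calls are not timed
    start = time.perf_counter()
    for sample in inputs:
        run_inference(sample)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / len(inputs)


def fake_model(image):
    """Stand-in workload so the sketch runs without a trained model."""
    return sum(image)


images = [list(range(1000)) for _ in range(100)]  # 100 dummy "images"
print(f"{average_latency_ms(fake_model, images):.4f} ms per image")
```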

<details>
<summary>Segmentation Usage Examples <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/segment/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a></summary>

### Train

YOLOv5 segmentation training supports auto-download of the COCO128-seg segmentation dataset with the
`--data coco128-seg.yaml` argument, and manual download of the COCO-segments dataset with
`bash data/scripts/get_coco.sh --train --val --segments` followed by `python train.py --data coco.yaml`.

```bash
# Single-GPU
python segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640

# Multi-GPU DDP
python -m torch.distributed.run --nproc_per_node 4 --master_port 1 segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640 --device 0,1,2,3
```
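
In the Multi-GPU DDP command, `--nproc_per_node` has to match the number of GPU indices passed to `--device`. One way to keep the two in step is to derive both from a single list, as in this sketch. `ddp_command` is a hypothetical helper, and the default port of 29500 is an assumption for illustration (the README command itself uses `--master_port 1`).

```python
def ddp_command(script, devices, extra_args, master_port=29500):
    """Build a torch.distributed.run launch line with one process per listed GPU."""
    device_list = ",".join(str(device) for device in devices)
    return (
        f"python -m torch.distributed.run --nproc_per_node {len(devices)} "
        f"--master_port {master_port} {script} {extra_args} --device {device_list}"
    )


print(
    ddp_command(
        "segment/train.py",
        devices=[0, 1, 2, 3],
        extra_args="--data coco128-seg.yaml --weights yolov5s-seg.pt --img 640",
        master_port=1,  # value used in the README command above
    )
)
```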

|

|

|

|

### Val

|

|

|

|

Validate YOLOv5s-seg mask mAP on COCO dataset:

|

|

|

|

```bash

|

|

bash data/scripts/get_coco.sh --val --segments # download COCO val segments split (780MB, 5000 images)

|

|

python segment/val.py --weights yolov5s-seg.pt --data coco.yaml --img 640 # validate

|

|

```

|

|

|

|

### Predict

|

|

|

|

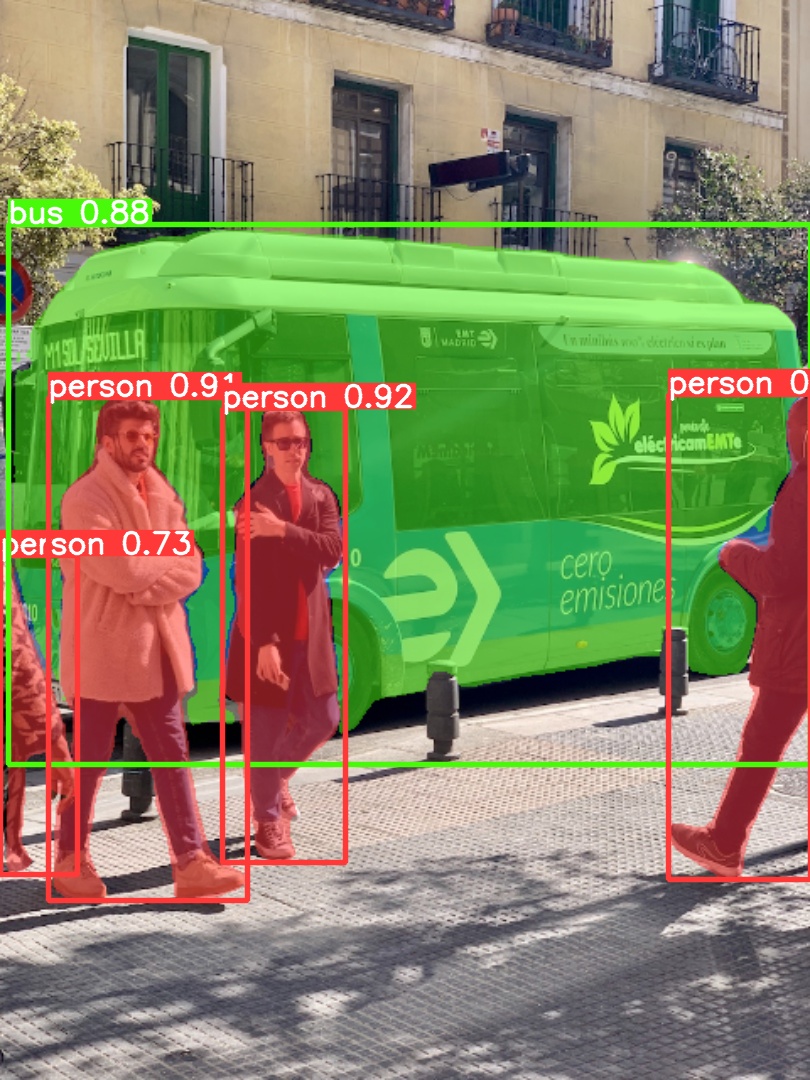

Use pretrained YOLOv5m-seg.pt to predict bus.jpg:

|

|

|

|

```bash

|

|

python segment/predict.py --weights yolov5m-seg.pt --data data/images/bus.jpg

|

|

```

|

|

|

|

```python

|

|

model = torch.hub.load(

|

|

"ultralytics/yolov5", "custom", "yolov5m-seg.pt"

|

|

) # load from PyTorch Hub (WARNING: inference not yet supported)

|

|

```

|

|

|

|

|  |  |

|

|

| ---------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------- |

|

|

|

|

### Export

|

|

|

|

Export YOLOv5s-seg model to ONNX and TensorRT:

|

|

|

|

```bash

|

|

python export.py --weights yolov5s-seg.pt --include onnx engine --img 640 --device 0

|

|

```

|

|

|

|

</details>

## <div align="center">Classification</div>

YOLOv5 [release v6.2](https://github.com/ultralytics/yolov5/releases) brings support for classification model training,
validation and deployment! See full details in our [Release Notes](https://github.com/ultralytics/yolov5/releases/v6.2)
and visit
our [YOLOv5 Classification Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/classify/tutorial.ipynb)
for quickstart tutorials.

<details>
<summary>Classification Checkpoints</summary>

<br>

We trained YOLOv5-cls classification models on ImageNet for 90 epochs using a 4xA100 instance, and we trained ResNet and
EfficientNet models alongside with the same default training settings to compare. We exported all models to ONNX FP32
for CPU speed tests and to TensorRT FP16 for GPU speed tests. We ran all speed tests on
Google [Colab Pro](https://colab.research.google.com/signup) for easy reproducibility.

| Model | size<br><sup>(pixels) | acc<br><sup>top1 | acc<br><sup>top5 | Training<br><sup>90 epochs<br>4xA100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TensorRT V100<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@224 (B) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| [YOLOv5n-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-cls.pt) | 224 | 64.6 | 85.4 | 7:59 | **3.3** | **0.5** | **2.5** | **0.5** |
| [YOLOv5s-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-cls.pt) | 224 | 71.5 | 90.2 | 8:09 | 6.6 | 0.6 | 5.4 | 1.4 |
| [YOLOv5m-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-cls.pt) | 224 | 75.9 | 92.9 | 10:06 | 15.5 | 0.9 | 12.9 | 3.9 |
| [YOLOv5l-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-cls.pt) | 224 | 78.0 | 94.0 | 11:56 | 26.9 | 1.4 | 26.5 | 8.5 |
| [YOLOv5x-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-cls.pt) | 224 | **79.0** | **94.4** | 15:04 | 54.3 | 1.8 | 48.1 | 15.9 |
| | | | | | | | | |
| [ResNet18](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet18.pt) | 224 | 70.3 | 89.5 | **6:47** | 11.2 | 0.5 | 11.7 | 3.7 |
| [ResNet34](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet34.pt) | 224 | 73.9 | 91.8 | 8:33 | 20.6 | 0.9 | 21.8 | 7.4 |
| [ResNet50](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet50.pt) | 224 | 76.8 | 93.4 | 11:10 | 23.4 | 1.0 | 25.6 | 8.5 |
| [ResNet101](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet101.pt) | 224 | 78.5 | 94.3 | 17:10 | 42.1 | 1.9 | 44.5 | 15.9 |
| | | | | | | | | |
| [EfficientNet_b0](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b0.pt) | 224 | 75.1 | 92.4 | 13:03 | 12.5 | 1.3 | 5.3 | 1.0 |
| [EfficientNet_b1](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b1.pt) | 224 | 76.4 | 93.2 | 17:04 | 14.9 | 1.6 | 7.8 | 1.5 |
| [EfficientNet_b2](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b2.pt) | 224 | 76.6 | 93.4 | 17:10 | 15.9 | 1.6 | 9.1 | 1.7 |
| [EfficientNet_b3](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b3.pt) | 224 | 77.7 | 94.0 | 19:19 | 18.9 | 1.9 | 12.2 | 2.4 |

<details>
<summary>Table Notes (click to expand)</summary>

- All checkpoints are trained to 90 epochs with SGD optimizer with `lr0=0.001` and `weight_decay=5e-5` at image size 224
  and all default settings.<br>Runs logged to https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2
- **Accuracy** values are for single-model single-scale on [ImageNet-1k](https://www.image-net.org/index.php)
  dataset.<br>Reproduce by `python classify/val.py --data ../datasets/imagenet --img 224`
- **Speed** averaged over 100 inference images using a Google [Colab Pro](https://colab.research.google.com/signup) V100
  High-RAM instance.<br>Reproduce by `python classify/val.py --data ../datasets/imagenet --img 224 --batch 1`
- **Export** to ONNX at FP32 and TensorRT at FP16 done with `export.py`. <br>Reproduce
  by `python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224`

</details>
</details>
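
The top1 and top5 columns report how often the true class is among the model's one or five highest-scoring predictions. A minimal pure-Python sketch of that computation follows. `topk_accuracy` is a hypothetical helper for illustration, and the scores are made-up numbers, not model outputs.

```python
def topk_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score
        ranked = sorted(range(len(row)), key=lambda index: row[index], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


# Three samples, three classes; values are illustrative only
scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
]
labels = [1, 1, 0]
print(topk_accuracy(scores, labels, k=1))
print(topk_accuracy(scores, labels, k=2))
```

Top-5 accuracy can never be lower than top-1 accuracy for the same model, which matches every row of the table.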

<details>
<summary>Classification Usage Examples <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/classify/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a></summary>

### Train

YOLOv5 classification training supports auto-download of MNIST, Fashion-MNIST, CIFAR10, CIFAR100, Imagenette, Imagewoof,
and ImageNet datasets with the `--data` argument. To start training on MNIST, for example, use `--data mnist`.

```bash
# Single-GPU
python classify/train.py --model yolov5s-cls.pt --data cifar100 --epochs 5 --img 224 --batch 128

# Multi-GPU DDP
python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3
```

### Val

Validate YOLOv5m-cls accuracy on ImageNet-1k dataset:

```bash
bash data/scripts/get_imagenet.sh --val  # download ImageNet val split (6.3G, 50000 images)
python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224  # validate
```

### Predict

Use pretrained YOLOv5s-cls.pt to predict bus.jpg:

```bash
python classify/predict.py --weights yolov5s-cls.pt --source data/images/bus.jpg
```

```python
import torch

model = torch.hub.load(
    "ultralytics/yolov5", "custom", "yolov5s-cls.pt"
)  # load from PyTorch Hub
```

### Export

Export a group of trained YOLOv5s-cls, ResNet and EfficientNet models to ONNX and TensorRT:

```bash
python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --include onnx engine --img 224
```

</details>

## <div align="center">Environments</div>

Get started in seconds with our verified environments. Click each icon below for details.

<div align="center">
<a href="https://bit.ly/yolov5-paperspace-notebook">
<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-gradient.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />
<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb">
<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-colab-small.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />
<a href="https://www.kaggle.com/ultralytics/yolov5">
<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-kaggle-small.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />
<a href="https://hub.docker.com/r/ultralytics/yolov3">
<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-docker-small.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />
<a href="https://github.com/ultralytics/yolov5/wiki/AWS-Quickstart">
<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-aws-small.png" width="10%" /></a>
<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />
<a href="https://github.com/ultralytics/yolov5/wiki/GCP-Quickstart">
<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-gcp-small.png" width="10%" /></a>
</div>


## <div align="center">Contribute</div>

We love your input! We want to make contributing as easy and transparent as possible. Please see
our [Contributing Guide](CONTRIBUTING.md) to get started, and fill out
the [Survey](https://ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey) to send us
feedback on your experiences. Thank you to all our contributors!

<!-- SVG image from https://opencollective.com/ultralytics/contributors.svg?width=990 -->

<a href="https://github.com/ultralytics/yolov5/graphs/contributors">
<img src="https://github.com/ultralytics/assets/raw/main/im/image-contributors.png" /></a>


## <div align="center">License</div>

This repository is available under two different licenses:

- **GPL-3.0 License**: See the [LICENSE](https://github.com/ultralytics/yolov5/blob/master/LICENSE) file for details.
- **Enterprise License**: Provides greater flexibility for commercial product development without the open-source
  requirements of GPL-3.0. Typical use cases are embedding Ultralytics software and AI models in commercial products and
  applications. Request an Enterprise License at [Ultralytics Licensing](https://ultralytics.com/license).


## <div align="center">Contact</div>

For bug reports and feature requests, please visit [GitHub Issues](https://github.com/ultralytics/yolov5/issues) or
the [Ultralytics Community Forum](https://community.ultralytics.com/).


<br>
<div align="center">
  <a href="https://github.com/ultralytics" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="3%" alt="" /></a>
  <img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />
  <a href="https://www.linkedin.com/company/ultralytics" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="3%" alt="" /></a>
  <img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />
  <a href="https://twitter.com/ultralytics" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="3%" alt="" /></a>
  <img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />
  <a href="https://www.producthunt.com/@glenn_jocher" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-producthunt.png" width="3%" alt="" /></a>
  <img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />
  <a href="https://youtube.com/ultralytics" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="3%" alt="" /></a>
  <img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />
  <a href="https://www.facebook.com/ultralytics" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-facebook.png" width="3%" alt="" /></a>
  <img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />
  <a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">
    <img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>
</div>


[tta]: https://github.com/ultralytics/yolov5/issues/303
